I spent a day building a video search prototype and came away genuinely surprised. Not by any single model — they’re all “pretty good” on their own — but by what happens when you stack them together.

The constraints compound. A so-so visual match plus a so-so transcript hit often surfaces exactly the right shot. It’s unreasonably effective.

The Setup

I wanted to see how far open-source models could take intelligent video search. The kind of thing where you type “outdoor scene with two people talking about robots” and get useful results.

Test footage: “Tears of Steel” — a 12-minute CC-BY short film from Blender Foundation. VFX, dialog, multiple characters. Good variety.

The goal: stack filters in real-time. Visual content → face → dialog → timecode. See how precisely you can drill down.

Shot Segmentation

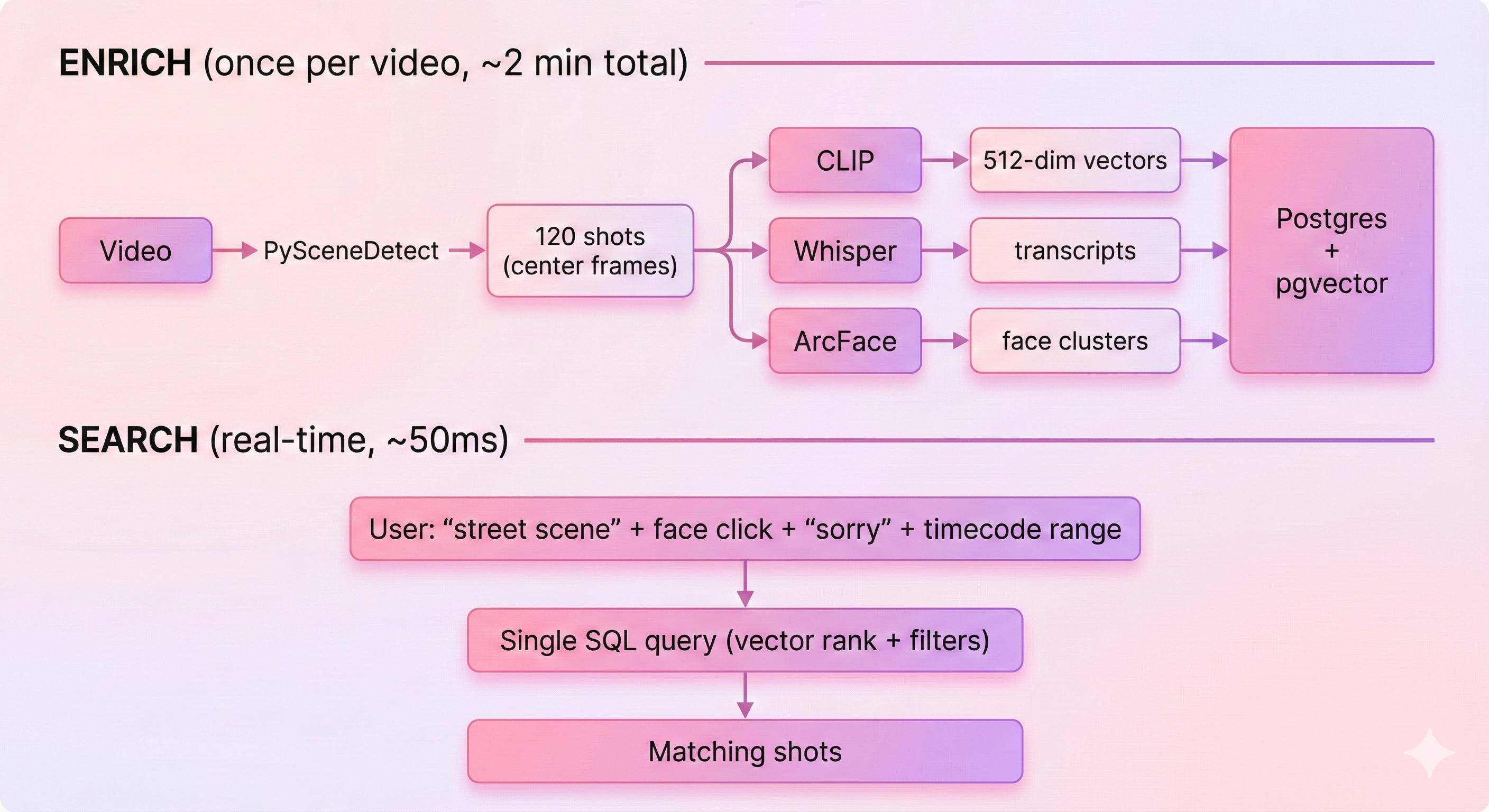

First step: break the video into shots. We’re not embedding every frame — that would be wasteful and slow. Instead:

- Detect shot boundaries using PySceneDetect’s ContentDetector (analyzes frame-to-frame differences to find cuts)

- Extract a representative thumbnail for each shot — just the center frame in this prototype

- Run models on that single thumbnail per shot

For “Tears of Steel,” this gave ~120 shots from 12 minutes. Each shot gets one CLIP embedding, one face detection pass, and the transcript segments that overlap its timecode range.

This keeps compute reasonable and mirrors how editors actually think — in shots, not frames.

The Three Models

All open-source, all running locally on a MacBook Air (M3). No cloud inference.

CLIP (ViT-B-32) — The Swiss Army knife. Embed images and text into the same vector space, then compare. “Street scene” finds street scenes. “Green tones” finds green-graded shots. “Credits” finds credits. One model, endless queries. (CLIP embeddings also turn out to be surprisingly effective at detecting AI-generated images.)

- Enrichment: ~30ms per shot (single thumbnail)

Whisper (base) — Speech to timestamped transcript. Runs on the full audio track, then segments are linked to shots by timestamp overlap.

- Enrichment: ~45s for the full 12-minute video

ArcFace (buffalo_l via InsightFace) — Face detection and embedding on the representative thumbnail. Click a face, find all other shots with that person. No identification needed, just clustering by visual similarity.

- Enrichment: ~100ms per shot (single thumbnail)

That’s it. Three models. The magic is in how they combine.

Why Stacking Works So Well

Each filter alone returns “roughly right” results. But stack two or three and the precision jumps dramatically.

Example workflow from the demo:

- Search “street scene, couple” → ~15 shots

- Click “Match” on an interesting frame → visually similar shots

- Click a face → only shots with that character

- Add “sorry” to dialog search → 2 results, both exactly right

Each step cuts the noise. By the end, you’re looking at exactly what you wanted.

The same principle works for color. CLIP understands “green tones” or “warm sunset” without any separate color extraction. Add a face filter on top and you get “shots of this character in warm lighting.” No custom code for that combination — it just falls out of the architecture.

The Architecture

Deliberately simple. ~500 lines of Python.

The key insight: PostgreSQL + pgvector stores everything in one place. Embeddings, transcripts, face clusters, timestamps — all in the same database.

This means a single SQL query can:

- Filter on metadata (timecode range, face cluster)

- Rank by vector similarity (CLIP embedding distance)

- Full-text search on transcripts

No need to query multiple systems and merge results. One query, one round trip.

SELECT scene_index, timestamp, filepath,

1 - (clip_embedding <=> $1) as similarity

FROM scenes

WHERE ($2 IS NULL OR face_cluster_id = $2)

AND ($3 IS NULL OR transcript ILIKE '%' || $3 || '%')

AND timestamp BETWEEN $4 AND $5

ORDER BY clip_embedding <=> $1

LIMIT 50;That’s filtering by face, dialog, and timecode while ranking by visual similarity — in one query.

The Claude Code Part

I’ll be honest: this prototype exists because of Claude Code with Opus 4.5.

It wasn’t a “write me a video search app” one-shot. It was a day-long collaboration:

- I described the architecture and models I wanted

- Claude scaffolded the project structure

- I’d test, hit issues, describe what was wrong

- Claude would debug, refactor, improve

- Repeat

The iteration speed is what made this feasible. In one day I went from “I wonder if this would work” to a working demo I’m proud to show people. That used to be a week of wrestling with documentation and Stack Overflow.

The code isn’t perfect. There are rough edges. But the core insight — stacking small models — is validated. That’s what a prototype is for.

What’s Next

This was 12 minutes of footage. Not a scale test. But the results are promising enough that I want to push further:

- More models: Object detection (YOLO/SAM), OCR for on-screen text, audio classification

- Longer content: Feature-length films, dailies from real productions

- NLP layer: Parse “outdoor shots with the main character talking about technology” into structured filters

Each additional model adds another dimension to filter on. The architecture supports it — just add another column and another filter clause.

The Takeaway

If you’re building search over any media type, don’t sleep on model stacking. A 512-dim CLIP embedding, a transcript, and a face cluster ID — three simple signals — combine into search that feels intelligent.

The models are all open-source. The infrastructure is Postgres with an extension. The frontend is vanilla HTML/JS. None of this is exotic.

The magic is in the combination.

Jason Peterson

Jason Peterson