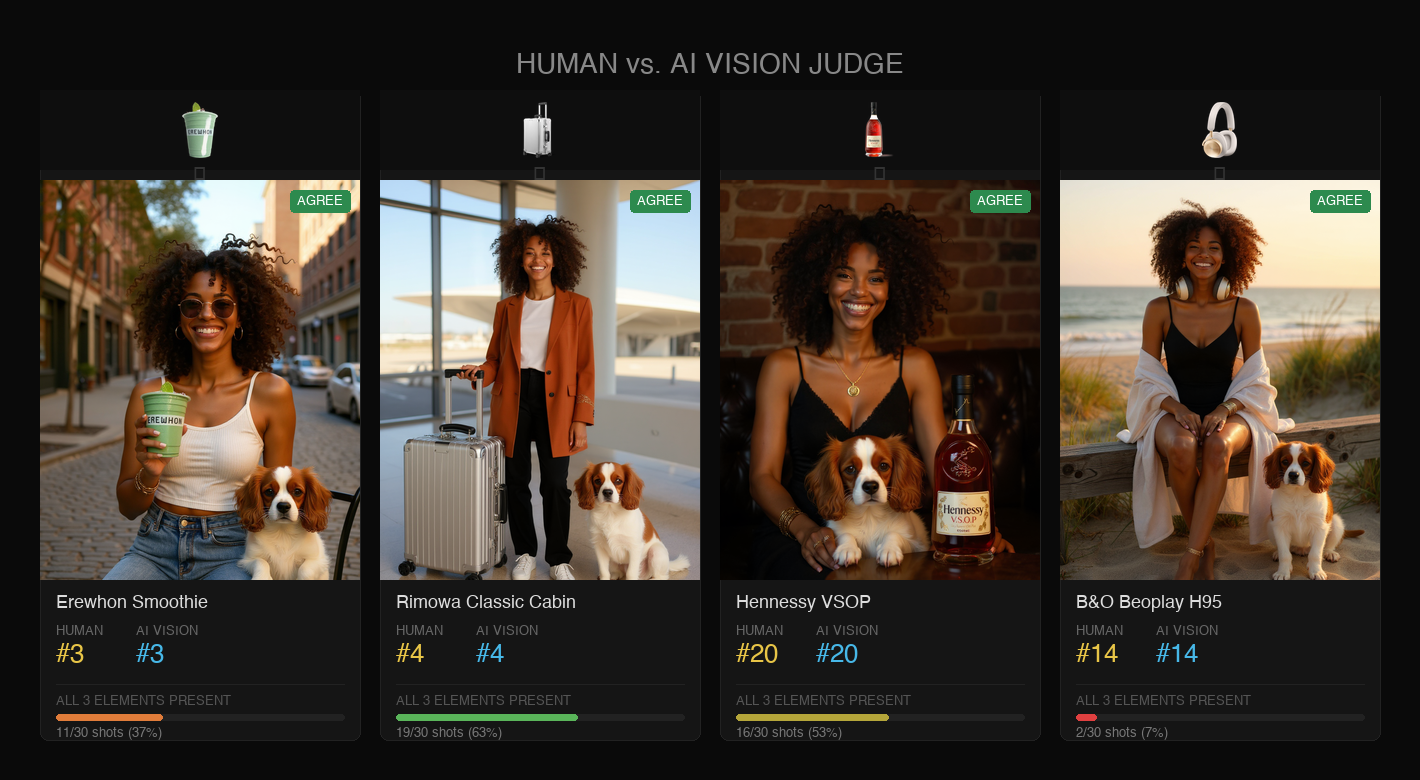

Erewhon Smoothie — Lilly and her Cavalier on a Brooklyn sidewalk. $0.04.

Rimowa Classic Cabin — airport terminal, golden hour. The suitcase sits beside her like it belongs. $0.04.

Hennessy VSOP — candlelit speakeasy, bottle on the table, dog on her lap. $0.04.

Bang & Olufsen Beoplay H95 — Montauk beach at golden hour. The hardest shot in the set: only 2 of 30 attempts got the headphones right. $0.04.

Four brand partnerships. 120 AI-generated photos. One fictional influencer, her dog, and a $4.80 fal.ai bill. This is the entire pipeline — what worked, what didn’t, and why it matters.

From Tilly to Lilly

Last September, Eline van der Velden announced at the Zurich Summit that her AI-generated actress “Tilly Norwood” was in talks with a talent agency. Emily Blunt, Melissa Barrera, and Whoopi Goldberg publicly condemned it. Van der Velden received death threats. The backlash proved something important: synthetic people are now consistent and believable enough to genuinely threaten livelihoods. But Tilly is an actress in controlled contexts. What about influencers — where the content IS the product?

Meet Lilly Sorghum. Late 20s, Afro-Caribbean heritage, effortlessly stylish, never without her Cavalier King Charles Spaniel. She has brand partnerships with Erewhon, Rimowa, Hennessy, and Bang & Olufsen. She doesn’t exist.

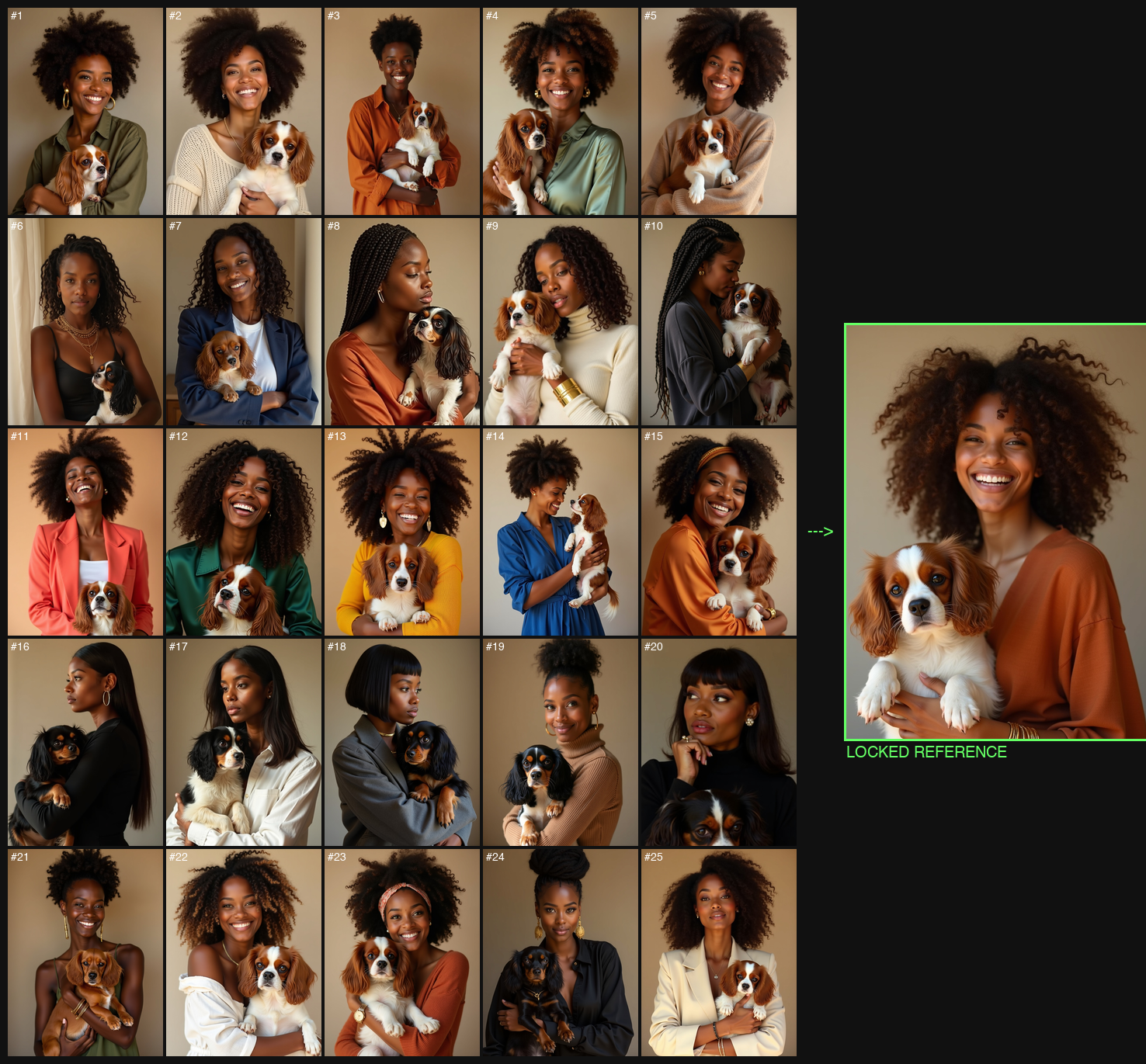

25 candidates, $0.63. Pick one, lock it, never regenerate her again.

I generated 25 candidates with Flux Dev ($0.63), picked one, and locked her as the reference anchor. Every shot that follows preserves her face, her dog, her vibe.

The goal isn’t to deceive — it’s to make the pipeline transparent so you can see exactly how trivial this has become.

How It Works

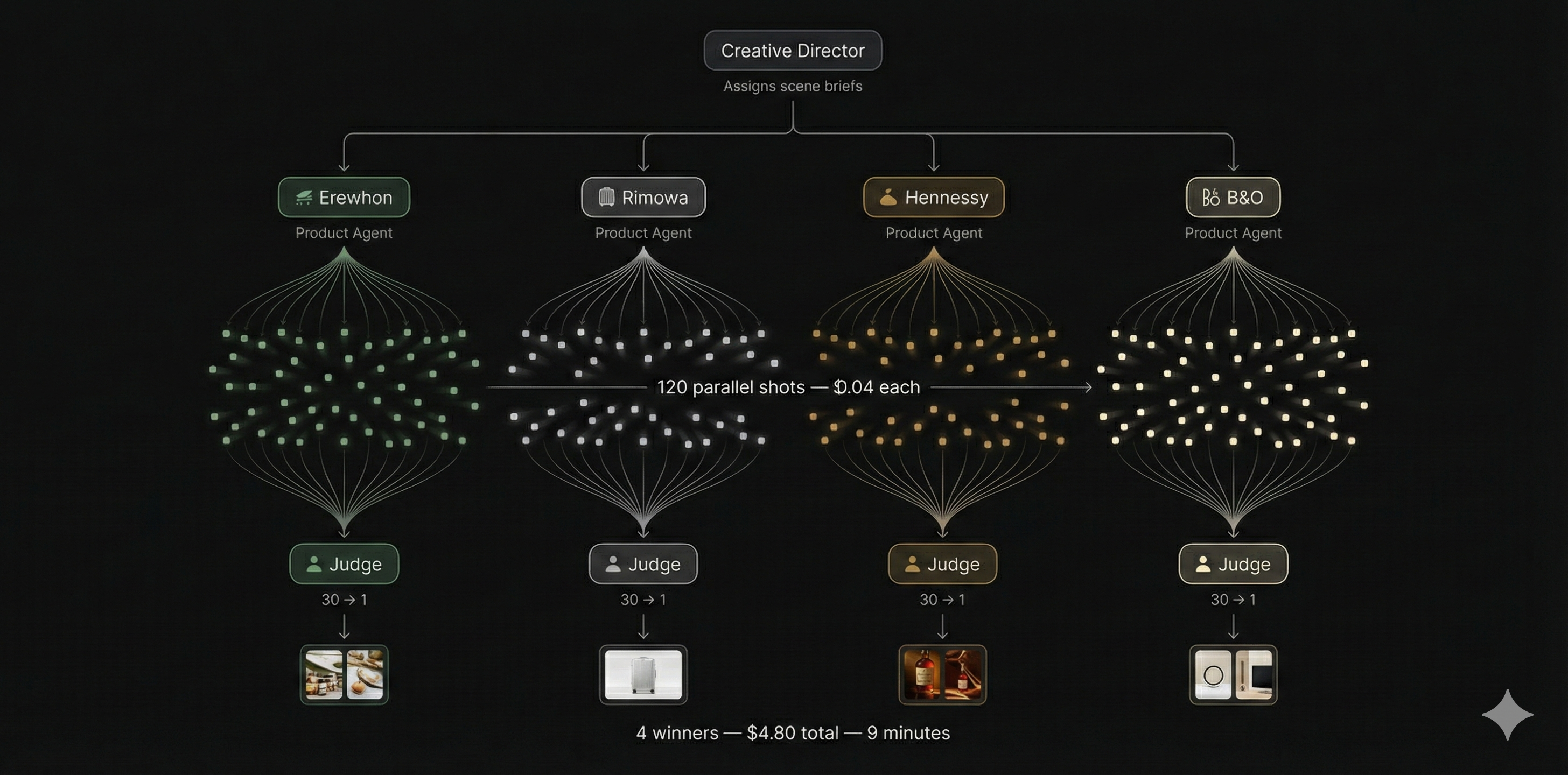

Fan out, fan in. 120 shots generated in parallel, 4 winners selected.

Claude Code is the orchestrator. I give it a creative brief and it spawns a swarm of sub-agents — one per product, each running in parallel, each spawning its own 30 shot agents. The lead agent writes the briefs, the product agents write the prompts, the shot agents call fal.ai, and the judge agents evaluate the results with vision. No Python threading, no job queue — just Claude Code talking to itself in parallel and writing the code to make the API calls.
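For readers without an agent orchestrator, the same fan-out, fan-in shape can be sketched in plain Python. This is not how the project ran: a thread pool stands in for the sub-agents, and `generate_shot` and `judge` are hypothetical stubs that only return placeholders so the structure is visible.

```python
from concurrent.futures import ThreadPoolExecutor

PRODUCTS = ["Erewhon", "Rimowa", "Hennessy", "B&O"]
SHOTS_PER_PRODUCT = 30


def generate_shot(product: str, shot: int) -> dict:
    """Hypothetical stand-in for one shot agent; a real one would call the image API."""
    return {"product": product, "shot": shot, "url": f"placeholder://{product}/{shot}"}


def judge(shots: list[dict]) -> dict:
    """Hypothetical stand-in for the vision judge; here it simply returns the first shot."""
    return shots[0]


def run_pipeline() -> tuple[list[dict], dict[str, dict]]:
    # Fan out: one task per (product, shot) pair, 4 x 30 = 120 in total.
    tasks = [(p, n) for p in PRODUCTS for n in range(1, SHOTS_PER_PRODUCT + 1)]
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        shots = list(pool.map(lambda t: generate_shot(*t), tasks))
    # Fan in: group the results by product and keep one winner for each.
    winners = {p: judge([s for s in shots if s["product"] == p]) for p in PRODUCTS}
    return shots, winners


shots, winners = run_pipeline()
print(len(shots), "shots generated,", len(winners), "winners selected")
```

Swapping the stubs for a real image call and a real scoring step would keep the same two phases: everything independent runs at once, then one selection pass per product.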

Each shot agent’s core is about 10 lines of Python that Claude wrote:

    import fal_client

    # Send the prompt plus two reference images (Lilly with her dog, and the product photo).
    result = fal_client.subscribe("fal-ai/flux-pro/kontext/multi", arguments={
        "prompt": prompt,
        "image_urls": [ref_url, product_url],
        "output_format": "png",
        "aspect_ratio": "3:4",
    })
    # Take the URL of the first generated image from the response.
    url = result["images"][0]["url"]

Pass fal.ai’s Kontext Pro Multi two reference images (Lilly with her dog, and the product photo), describe the scene, and get back an integrated shot. $0.04 each. 9 minutes wall clock for all 120.

fal.ai made this project possible in a way that local inference couldn’t. One API key, no GPU provisioning, no model downloads — and critically, their infrastructure handled 120 concurrent requests without breaking a sweat. The developer experience is remarkably clean: one fal_client.subscribe() call per image, results back in seconds. When you’re building a parallelized pipeline, that simplicity compounds.

The star of the show is Kontext Multi’s scene understanding. It doesn’t just paste objects — it rotates a suitcase upright, places a bottle on a table, wraps headphones around a neck. All from flat product photos.

A year ago, character consistency was the hard problem. You’d fine-tune a LoRA, train DreamBooth for hours, and still get drift by image 20. Now it’s one reference image passed as an API parameter. Lilly is recognizably herself in all 120 shots — same face, same dog, different scenes, different outfits. Consistency is table stakes. Product integration is the new wild card:

    COHERENCE: All 3 Elements (Lilly + Dog + Product)
    ────────────────────────────────────────────────────
    Rimowa   (suitcase beside her):       19/30   63%
    Hennessy (bottle on table):           16/30   53%
    Erewhon  (cup in hand):               11/30   37%
    B&O      (headphones around neck):     2/30    7%

The pattern: objects that sit beside the subject are easy. Held objects are harder. Wearables are nearly impossible: only 2 of 30 B&O shots got headphones right. The brief matters more than prompt engineering.

The solve: generate many, pick few. 30 candidates at $0.04 each gives you enough even at 7%. That 30:1 ratio is how real creative production works — AI just makes it $1.20 instead of $15,000.
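The "generate many, pick few" arithmetic can be checked with a few lines of Python. This is a rough sketch that assumes each attempt is independent and that the observed hit rates in the table above are the true rates; both are simplifications, and the function name is my own.

```python
def p_at_least_one(hit_rate: float, attempts: int) -> float:
    """Chance that at least one of `attempts` independent tries succeeds."""
    return 1.0 - (1.0 - hit_rate) ** attempts


# Coherent shots out of 30 attempts, taken from the table above.
observed = {"Rimowa": 19, "Hennessy": 16, "Erewhon": 11, "B&O": 2}
ATTEMPTS = 30
PRICE_CENTS = 4  # $0.04 per image

for product, hits in observed.items():
    rate = hits / ATTEMPTS
    chance = p_at_least_one(rate, ATTEMPTS)
    cents_per_hit = ATTEMPTS * PRICE_CENTS / hits
    print(
        f"{product:9s} rate={rate:.0%}  "
        f"P(at least one in {ATTEMPTS})={chance:.1%}  "
        f"cost per coherent shot=${cents_per_hit / 100:.2f}"
    )
```

Under those assumptions, even the B&O rate of 2 in 30 gives roughly an 87% chance of at least one coherent shot per batch of 30, which is why the batch size matters more than any single prompt.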

The Results

Human picks vs. AI vision judge — 4/4 agreement on winners.

After generating 120 images, I picked winners two ways: by hand, and by having Claude evaluate every image with vision, scoring on 8 criteria — character consistency, product visibility, composition, scroll-stop factor, and more.

    HUMAN vs. AI JUDGE: Winner Picks
    ──────────────────────────────────
    Rimowa:     Human #4    AI #4    ✓
    Erewhon:    Human #3    AI #3    ✓
    Hennessy:   Human #20   AI #20   ✓
    B&O:        Human #14   AI #14   ✓

4/4 agreement on winners. They diverged on runners-up (2 out of 3 different): the obvious best stands out, while the second-best is subjective. This suggests the judging step could be fully automated. The entire pipeline, from brief to finished post, could run unattended.
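The agreement check itself is small enough to write down. A minimal sketch, using the winner picks from the table above; the `agreement` helper is a hypothetical name, not part of the original pipeline.

```python
# Winning shot number per product, as picked by hand and by the vision judge.
human = {"Rimowa": 4, "Erewhon": 3, "Hennessy": 20, "B&O": 14}
ai_judge = {"Rimowa": 4, "Erewhon": 3, "Hennessy": 20, "B&O": 14}


def agreement(a: dict, b: dict) -> tuple[int, int]:
    """Return (number of products where both picks match, number of products in a)."""
    matched = sum(1 for product, pick in a.items() if b.get(product) == pick)
    return matched, len(a)


matched, total = agreement(human, ai_judge)
print(f"{matched}/{total} agreement on winners")
```

The same helper could be pointed at runner-up picks to track where the two judges start to diverge.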

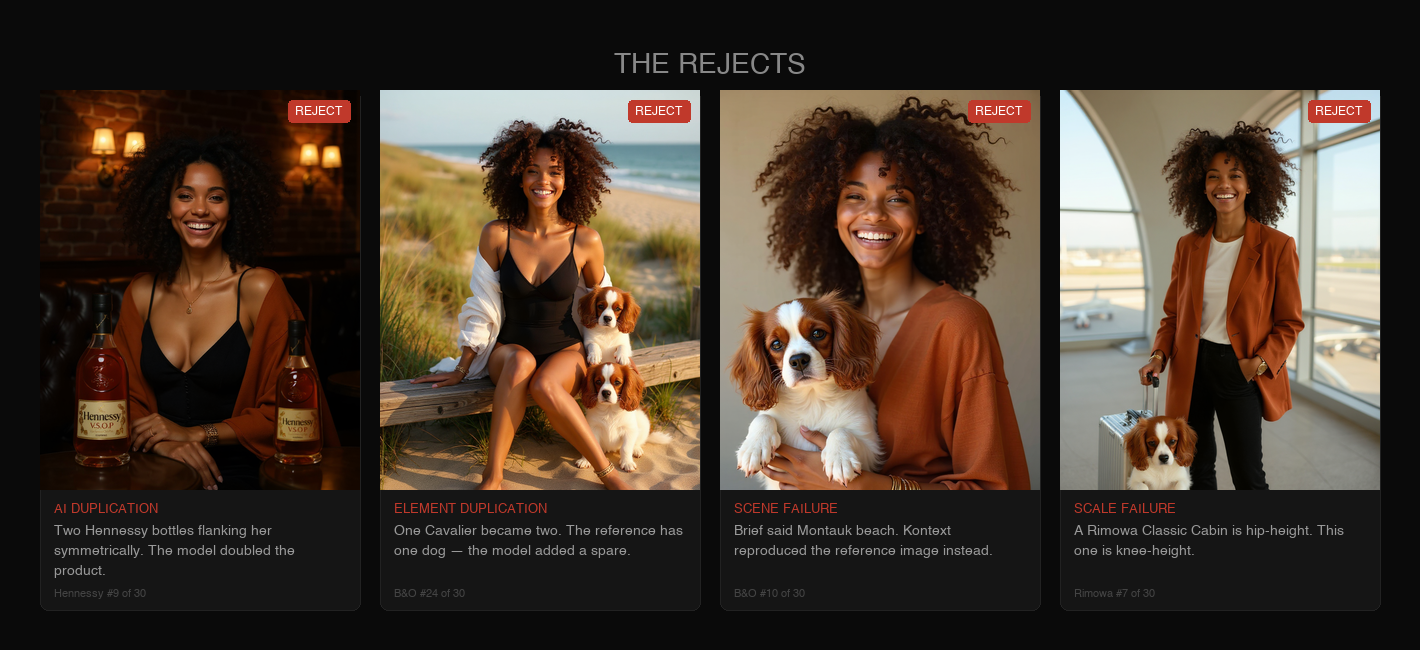

The failure modes: doubled products, extra dogs, ignored briefs, wrong scale.

Not every shot works. These are the failure modes you design around by generating 30 candidates, not 3.

The Cost and the Point

    TOTAL PIPELINE COST
    ────────────────────────────────────────
    Audition:       $0.63   (25 Flux Dev)
    Product prep:   $0.08   (4x background removal)
    Production:     $4.80   (120 Kontext Multi)
    ────────────────────────────────────────
    Total:          $5.51

    Images:         120 generated → 4 delivered
    Time:           9 minutes

This replaces real work done by real people. Photographers, stylists, location scouts, content managers, the influencers themselves. A week of content that used to involve a team and a budget now costs $5 and a laptop.

The economics make it inevitable. When something costs $5 and takes 9 minutes, companies will do it. Many already are.
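The "$5" figure can be re-derived from the line items in the cost table. A small sketch, working in whole cents to avoid float rounding; the variable names are my own.

```python
# Line items from the cost table, in cents.
PRICE_PER_SHOT_CENTS = 4  # $0.04 per Kontext Multi image
line_items = {
    "Audition": 63,                            # 25 Flux Dev candidates
    "Product prep": 8,                         # 4 background removals
    "Production": 120 * PRICE_PER_SHOT_CENTS,  # 120 shots
}

total_cents = sum(line_items.values())
delivered_images = 4

print(f"Total: ${total_cents / 100:.2f}")
print(f"Per delivered image: ${total_cents / 100 / delivered_images:.2f}")
```

Dividing by the four delivered images rather than the 120 generated is the fairer comparison with a traditional shoot, since discarded takes are part of the cost of each keeper.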

These aren’t portfolio-grade images. I’m a nerd, not an art director. But that’s the point — if a solo dev with Claude Code and a fal.ai key can produce this in an afternoon, imagine what a professional creative team could do with the same tools. I also wrote a non-technical version of this project for readers who don’t write code.

I’m not going to wrap this in a bow. The technology is here, it works, and it’s only getting cheaper. What happens next is a policy question, not a technical one.

Bonus: They Move Now

B&O — ocean breeze, the cardigan moves, the dog turns to camera. $0.53 total.

I’m showing only one here, and only as an animated GIF because that’s all Dev.to allows, but all four still images became video clips for $1.96. The influencer breathes now.

Jason Peterson
